The Zodiac FX from Northbound Networks is an OpenFlow switch designed to deliver the power of Software Defined Networking to researchers, students, and anyone who wants to develop their SDN skills or build applications using real hardware.

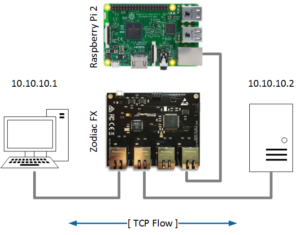

In a previous post, I described the process of connecting the Zodiac FX switch to a Raspberry Pi (a single-board computer) that runs an OpenFlow controller, Ryu. This post provides throughput measurements from the switch when using various Ryu applications.

Applications

The measurement tests compare the throughput of four controller applications:

- Pass Through (PT): This is an application that instructs the switch to forward traffic from port 1 to port 2 and vice versa.

- Simple Switch (SS): This is an application that implements an L2 learning switch using OpenFlow 1.3.

- Simple Switch Re-imagined (SS2): This is an application created by inside-openflow.com to expand on SS by implementing a multi-table flow pipeline. Using multiple tables allows a controller application to implement more logic and state in the switch itself, reducing the load on the controller. This application uses four tables.

- Faucet: This is a controller application that is designed for production networks. Faucet is deployed in various settings, including the Open Networking Foundation, as described here. The application employs a flow pipeline of seven tables.

In addition to the above applications, throughput measurements were also taken in a native mode. In this mode, the Zodiac FX switch works as a traditional L2 switch without a controller.
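To make the Pass Through application more concrete, here is a minimal sketch of the two flow entries it amounts to. It is not the code used in the tests. It assumes the entries are expressed as JSON payloads in the format accepted by Ryu's `ofctl_rest` application (`POST /stats/flowentry/add`); the function name `pass_through_flows`, the datapath ID `1`, and the priority are hypothetical values chosen for illustration.

```python
import json


def pass_through_flows(dpid, port_a=1, port_b=2, priority=1):
    """Build two flow entries: port_a -> port_b and port_b -> port_a.

    The dict layout follows the ofctl_rest flow-entry format (assumption:
    the controller exposes that REST API). dpid and priority are
    illustrative values, not taken from the original setup.
    """
    flows = []
    for in_port, out_port in ((port_a, port_b), (port_b, port_a)):
        flows.append({
            "dpid": dpid,
            "table_id": 0,                    # single-table pipeline
            "priority": priority,
            "match": {"in_port": in_port},    # match only on the ingress port
            "actions": [{"type": "OUTPUT", "port": out_port}],
        })
    return flows


# Print the payloads; each one could be POSTed to /stats/flowentry/add.
for flow in pass_through_flows(dpid=1):
    print(json.dumps(flow))
```

Because both entries are installed up front and match only on the ingress port, no packet ever needs to be sent to the controller, which is what distinguishes PT from the learning-switch applications.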

Setup

To measure the performance of all applications, I connected two PCs running Windows 10 to ports 1 and 2 of the Zodiac FX. Both PCs have 1 Gbit/s NICs to ensure that they do not introduce a bottleneck for the 100 Mbit/s switch. Traffic for the tests is generated using iPerf3 (v3.1.3). iPerf3 is a tool to measure the maximum achievable bandwidth on IP networks. It tests and reports the bandwidth, loss, and other parameters for TCP and UDP traffic over IPv4 or IPv6.

Test Scenario

iPerf3 is used to generate four types of TCP traffic flows between the two PCs for each of the four applications and the native mode. Each flow is generated for 30 seconds.

Single TCP stream Client to Server (C2S):

>iperf3 -t 30 -c 10.10.10.2

Single TCP stream Server to Client (S2C):

>iperf3 -t 30 -R -c 10.10.10.2

Five parallel TCP streams Client to Server (C2SP5):

>iperf3 -t 30 -P 5 -c 10.10.10.2

Five parallel TCP streams Server to Client (S2CP5):

>iperf3 -t 30 -R -P 5 -c 10.10.10.2
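The four commands above differ only in the `-R` and `-P 5` flags, so they are easy to script. The following is a sketch of how the runs could be automated from the client PC, not the procedure actually used for this post. It assumes `iperf3` is on the PATH and adds the `-J` flag so that iPerf3 emits a JSON report; the helper names and the choice to read the receiver-side rate (`end.sum_received.bits_per_second`) are my assumptions.

```python
import json
import subprocess

SERVER = "10.10.10.2"  # iPerf3 server address from the commands above
DURATION = 30          # seconds per flow

# Extra flags for each of the four flow types.
SCENARIOS = {
    "C2S": [],
    "S2C": ["-R"],
    "C2SP5": ["-P", "5"],
    "S2CP5": ["-R", "-P", "5"],
}


def build_command(extra_flags, server=SERVER, duration=DURATION):
    """Return the iperf3 argument list for one scenario, with JSON output."""
    return ["iperf3", "-J", "-t", str(duration), *extra_flags, "-c", server]


def received_mbps(report):
    """Extract the receiver-side TCP throughput in Mbit/s from a JSON report."""
    return report["end"]["sum_received"]["bits_per_second"] / 1e6


def run_all():
    """Run every scenario in turn and return {scenario: Mbit/s}."""
    results = {}
    for name, flags in SCENARIOS.items():
        proc = subprocess.run(build_command(flags), capture_output=True,
                              text=True, check=True)
        results[name] = received_mbps(json.loads(proc.stdout))
    return results
```

Calling `run_all()` once per controller application (and once in native mode) would produce one column of a results table.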

The switch configuration parameters used for the experiments are:

- Openflow Status: Enabled

- Failstate: Secure

- Force OpenFlow version: Disabled

- EtherType Filtering: Enabled

Note that in the Native mode, all ports are assigned to VLAN 100, which has the type ‘Native’. Note also that the EtherType Filtering is enabled to discard packets that do not include a valid EtherType. In the default setting (Disabled), the controller creates entries in the flow table in response to receiving frames with invalid EtherType. These entries are superfluous and cause the switch to crash when the size of the table grows too large.

Results

The table below summarizes the throughput measured in each test, in Mbit/s. The tests use the latest firmware version v8.1 (as of July 1, 2017).

| Flow Type \ Test | Native | PT | SS | SS2 | Faucet |
| --- | --- | --- | --- | --- | --- |
| C2S | 94.8 | 59.1 | 59.3 | 6.6 | 4.0 |
| S2C | 94.9 | 75.1 | 74.0 | 7.7 | 4.2 |
| C2SP5 | 95.0 | 72.4 | 68.6 | 16.4 | 8.5 |
| S2CP5 | 95.2 | 88.3 | 89.7 | 17.3 | 12.4 |
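To put the numbers in perspective, it can help to express each OpenFlow result as a percentage of the native-mode throughput for the same flow type. The short script below does that arithmetic on the values from the table; the variable and function names are mine.

```python
# Throughput values copied from the results table.
RESULTS = {
    "C2S":   {"Native": 94.8, "PT": 59.1, "SS": 59.3, "SS2": 6.6,  "Faucet": 4.0},
    "S2C":   {"Native": 94.9, "PT": 75.1, "SS": 74.0, "SS2": 7.7,  "Faucet": 4.2},
    "C2SP5": {"Native": 95.0, "PT": 72.4, "SS": 68.6, "SS2": 16.4, "Faucet": 8.5},
    "S2CP5": {"Native": 95.2, "PT": 88.3, "SS": 89.7, "SS2": 17.3, "Faucet": 12.4},
}


def percent_of_native(results):
    """Return {flow: {app: throughput as a percentage of native mode}}."""
    return {
        flow: {
            app: round(100 * value / row["Native"], 1)
            for app, value in row.items()
            if app != "Native"
        }
        for flow, row in results.items()
    }


for flow, row in percent_of_native(RESULTS).items():
    cells = ", ".join(f"{app}: {pct}%" for app, pct in row.items())
    print(f"{flow:6s} {cells}")
```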

Conclusions

The results show that the throughput of the switch drops significantly when it runs as an OpenFlow switch compared to the traditional switch mode, and drops even further for the applications that use more than one flow table. Since the test environment is very simple and consists of only one flow (the TCP flow between two PCs), I speculate that the performance drop is related to the size of the OpenFlow pipeline (1, 1, 4, and 7 tables for PT, SS, SS2, and Faucet, respectively). A larger pipeline requires more processing for each packet, because the packet must be matched against the entries in each table. Interestingly, the throughput in iPerf3 reverse mode (server to client) is higher than in the forward direction. Moreover, the throughput with parallel TCP streams is significantly higher than with a single stream, especially for the multi-table applications. These results require further investigation to determine the cause.
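The two observations about direction and parallelism can be quantified directly from the table. The sketch below computes, for each mode, the ratio of reverse to forward throughput and the ratio of five parallel streams to a single stream (client to server). It only restates the measured values; it says nothing about the cause, and the names used are mine.

```python
# Throughput values copied from the results table, grouped by mode.
RESULTS = {
    "Native": {"C2S": 94.8, "S2C": 94.9, "C2SP5": 95.0, "S2CP5": 95.2},
    "PT":     {"C2S": 59.1, "S2C": 75.1, "C2SP5": 72.4, "S2CP5": 88.3},
    "SS":     {"C2S": 59.3, "S2C": 74.0, "C2SP5": 68.6, "S2CP5": 89.7},
    "SS2":    {"C2S": 6.6,  "S2C": 7.7,  "C2SP5": 16.4, "S2CP5": 17.3},
    "Faucet": {"C2S": 4.0,  "S2C": 4.2,  "C2SP5": 8.5,  "S2CP5": 12.4},
}


def ratios(results):
    """Return per-mode ratios: reverse/forward and parallel/single (C2S)."""
    return {
        mode: {
            "reverse_vs_forward": row["S2C"] / row["C2S"],
            "parallel_vs_single": row["C2SP5"] / row["C2S"],
        }
        for mode, row in results.items()
    }


for mode, r in ratios(RESULTS).items():
    print(f"{mode:7s} reverse/forward = {r['reverse_vs_forward']:.2f}, "
          f"parallel/single = {r['parallel_vs_single']:.2f}")
```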

Acknowledgments

I would like to thank Paul Zanna, Founder and CEO of Northbound Networks, for suggesting adding the Pass Through test to eliminate the overhead of any packet in/out calls between the switch and the controller.